Executive Summary

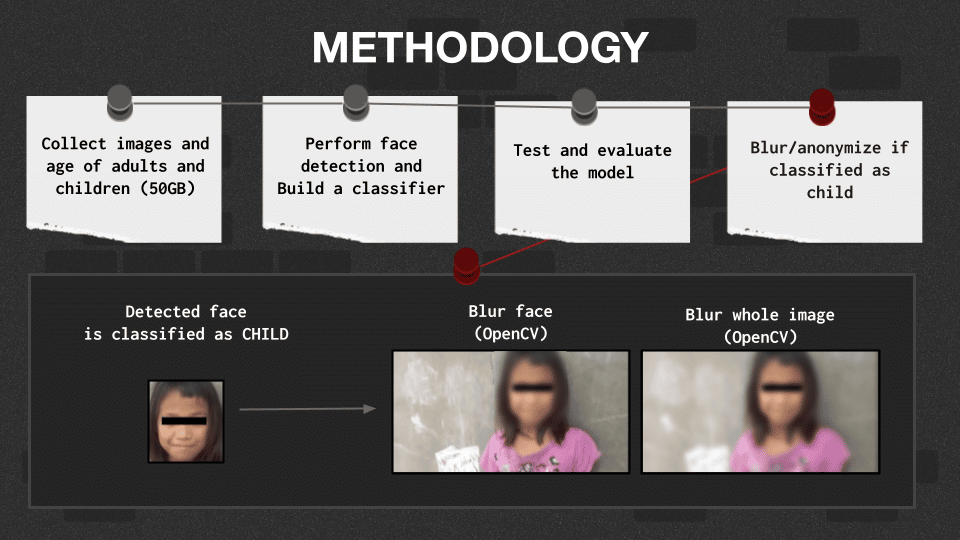

The internet has changed the way people communicate. With its advent, people all over the world have immediate access to family, relatives, friends, and sometimes complete strangers. However, the internet was not built with the safety of children in mind. Young Filipinos have become more active on social media, which exposes them to groomers. Some unfortunate ones have also been locked down with their own traffickers. Given this perennial challenge, the researchers asked, “How can we leverage big data techniques to detect and censor children in images and live streaming sites?”

The researchers used two (2) primary datasets for this study. The first is the Flickr-Faces-HQ (FFHQ) Images dataset, which contains around 70,000 high-quality .png images of faces. The second is the FFHQ Features Dataset, which provides various information about each face in FFHQ Images. In total, more than 50 GiB of data was processed. The FFHQ data was extracted and prepared on AWS virtual machines. The model was then implemented in three steps: face detection and cropping, application of the child classifier, and blurring of the face or whole body if the detected face is that of a child.

The metric chosen to assess model performance is recall. This metric essentially answers, “How many of the children’s images have been correctly identified?” Recall also emphasizes the cost of false negatives: it would be costly for the user of the model to misclassify a picture of a child as an adult. Following a cross-validation pipeline, the logistic regression model (the “child classifier”) achieved a test recall score of 84.5%. This means that the model correctly identifies an image of a child roughly 8 out of 10 times.

Online child abuse has been increasing at an exponential rate, and there is still no end in sight. There is therefore an urgent need to implement measures that help protect children. As demonstrated by the researchers, it is possible to employ big data techniques and machine learning to help protect these children by identifying and obscuring their faces.
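
To make the recall metric described above concrete, the following is a minimal sketch of how it is computed for the positive ("child") class. The function name `recall` and the labels are hypothetical and purely illustrative; they are not the study's data or code.

```python
def recall(y_true, y_pred, positive=1):
    """Recall = TP / (TP + FN) for the positive ("child") class."""
    pairs = list(zip(y_true, y_pred))
    # True positives: children correctly flagged as children.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False negatives: children misclassified as adults (the costly error).
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("y_true contains no positive examples")
    return tp / (tp + fn)


# Hypothetical labels: 1 = child, 0 = adult (illustrative only).
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 1, 0, 0]

score = recall(y_true, y_pred)
print(f"recall = {score:.2f}")  # 4 of the 5 children are caught: 0.80
```

Note that the one adult wrongly flagged as a child (a false positive) does not lower recall. Only missed children do, which is why this metric suits a setting where failing to blur a child's face is the more costly error.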