Abstract

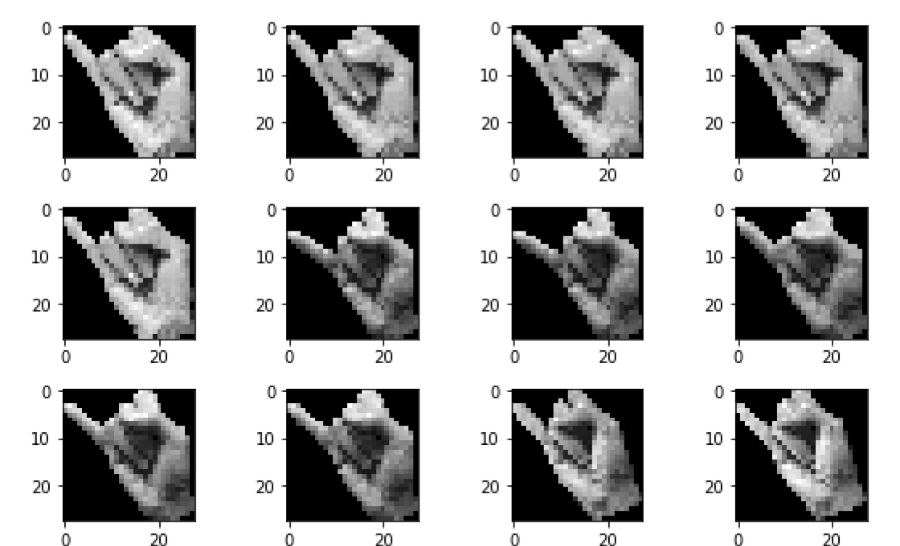

Across the globe, over 250 million people are estimated to have disabling hearing impairment. Given the daily struggles these people face, this study addresses the question: ‘Can we translate American Sign Language images to English text?’ The idea is to pave the way for an inclusive community, a World Without Borders. Facilitating exchanges between the hearing impaired and the rest of the world breaks down barriers to communication. The methodology involves processing American Sign Language images of the alphabet letters A-Z.

Initial results indicate that 83% of all images were correctly classified using Random Forest. To improve on the model, we assume that people intend to form whole words when signing. Hence, we apply auto-correction to the predicted letters, which increases the accuracy to 99%. In line with our results, we recommend developing an application capable of translating sign language to text in real time. We also propose that future researchers consider adding the digits 0-9 and other body gestures to further add value.
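
The auto-correction step can be illustrated with a minimal sketch. The abstract does not specify the correction method, so this example assumes a simple nearest-word lookup using Python's standard `difflib` module. The `VOCABULARY` list, the `autocorrect` function, and the `cutoff` value are hypothetical and chosen only for illustration.

```python
from difflib import get_close_matches

# Hypothetical vocabulary; a real system would load a full English word list.
VOCABULARY = ["hello", "world", "help", "thanks", "please"]


def autocorrect(predicted_letters, vocabulary=VOCABULARY, cutoff=0.6):
    """Map a sequence of per-image letter predictions to the closest known word.

    Assumption: each image has already been classified into a single letter
    (for example by a Random Forest), and the letters together spell one word.
    Falls back to the raw prediction when no vocabulary word is similar enough.
    """
    raw = "".join(predicted_letters).lower()
    matches = get_close_matches(raw, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else raw


# One misclassified letter ("F" instead of "E") is repaired by the lookup.
print(autocorrect(["H", "F", "L", "L", "O"]))
```

Under this assumption, a single misclassified letter in a word can be recovered from its context, which is one plausible way word-level correction could raise accuracy above the per-image classification rate.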